Data Streaming Overview

Streaming data is information generated continuously by thousands of data sources, which typically send their data records simultaneously and in small sizes. It includes a wide variety of data, such as log files generated by customers using your mobile or web applications, e-commerce purchases, in-game player activity, information from social networks, financial trading floors, or geospatial services, and telemetry from connected devices or instrumentation in data centers.

This data needs to be processed sequentially and incrementally, either record by record or over sliding time windows, and it is used for a wide variety of analytics including correlations, aggregations, filtering, and sampling. Information derived from such analysis gives companies visibility into many aspects of their business and customer activity, such as service usage (for metering and billing), server activity, website clicks, and the geo-location of devices, people, and physical goods, and enables them to respond promptly to emerging situations. For example, businesses can track changes in public sentiment on their brands and products by continuously analyzing social media streams, and respond in a timely fashion when necessary.

Streaming data processing is beneficial in most scenarios where new, dynamic data is generated continually. It applies to most industry segments and big data use cases. Companies generally begin with simple applications such as collecting system logs and rudimentary processing like rolling min-max computations. Then, these applications evolve into more sophisticated near-real-time processing. Initially, applications may process data streams to produce simple reports and perform simple actions in response, such as emitting alarms when key measures exceed certain thresholds. Eventually, those applications perform more sophisticated forms of data analysis, like applying machine learning algorithms and extracting deeper insights from the data. Over time, complex stream and event processing algorithms are applied, such as decaying time windows to find the most recent popular movies, further enriching the insights.

Why Golang at ProntoPro

Within ProntoPro's service platform we have a lot of microservices that have been developed in Golang. The Backend team works with this technology constantly and benefits from the features the language provides, such as:

- Simplicity and Development Velocity — We list this first on purpose. Unlike many other languages, Golang does not compete on the number of features; it makes readability and maintainability its priority. The makers of Golang add only features that are relevant and avoid making the language more complex. You get a feel for how simple Golang is as soon as you start working with it, and when you read someone else's Golang code, it tends to stay readable and understandable even in a large code base.

- Powerful Standard Library — Golang ships with a rich set of standard library packages (for example net/http, encoding/json, and testing), so much of your code can be written without third-party dependencies.

- Concurrency in Golang — Golang provides goroutines and channels to deal with concurrency. Concurrency helps make efficient use of multi-processor architectures and helps large applications scale.

- Web Application Building — Golang is becoming popular as a language for building web applications because of its simple constructs and fast execution speed. There are plenty of tutorials on the internet, and you can start with any of them.

- Testing Support — Golang provides a built-in way to test the packages you write. With just the “go test” command you can run the tests written in your “_test.go” files. Testing is a must for making any program reliable, so you should add a test function alongside the actual function each time you write some code.

- Speed of Compilation — This is where Golang wins the hearts of many: its compilation and execution speed is much better than that of many popular programming languages.
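To make the concurrency point above more concrete, here is a minimal sketch of goroutines and channels working together: a small pool of workers reads records from one channel and writes results to another. The `process` function and the sample records are placeholders invented for this example, not code from our services.

```go
package main

import (
	"fmt"
	"strings"
	"sync"
)

// process is a stand-in for real per-record work (decoding, enrichment, ...).
func process(rec string) string {
	return strings.ToUpper(rec)
}

// fanOut starts the given number of worker goroutines. Each worker reads
// records from in and sends processed results to the returned channel,
// which is closed once in is closed and all workers have finished.
func fanOut(in <-chan string, workers int) <-chan string {
	out := make(chan string)
	var wg sync.WaitGroup

	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for rec := range in {
				out <- process(rec)
			}
		}()
	}

	// Close out only after every worker is done, so readers can range over it.
	go func() {
		wg.Wait()
		close(out)
	}()
	return out
}

func main() {
	in := make(chan string)

	// Producer goroutine: emits a few records, then closes the channel.
	go func() {
		defer close(in)
		for _, rec := range []string{"click", "purchase", "login"} {
			in <- rec
		}
	}()

	n := 0
	for res := range fanOut(in, 2) {
		fmt.Println(res) // order may vary between runs
		n++
	}
	fmt.Println("processed", n)
}
```

Because the workers run concurrently, the order of the results is not guaranteed. When ordering matters, a common approach is to partition records by key so that each key is always handled by the same worker.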

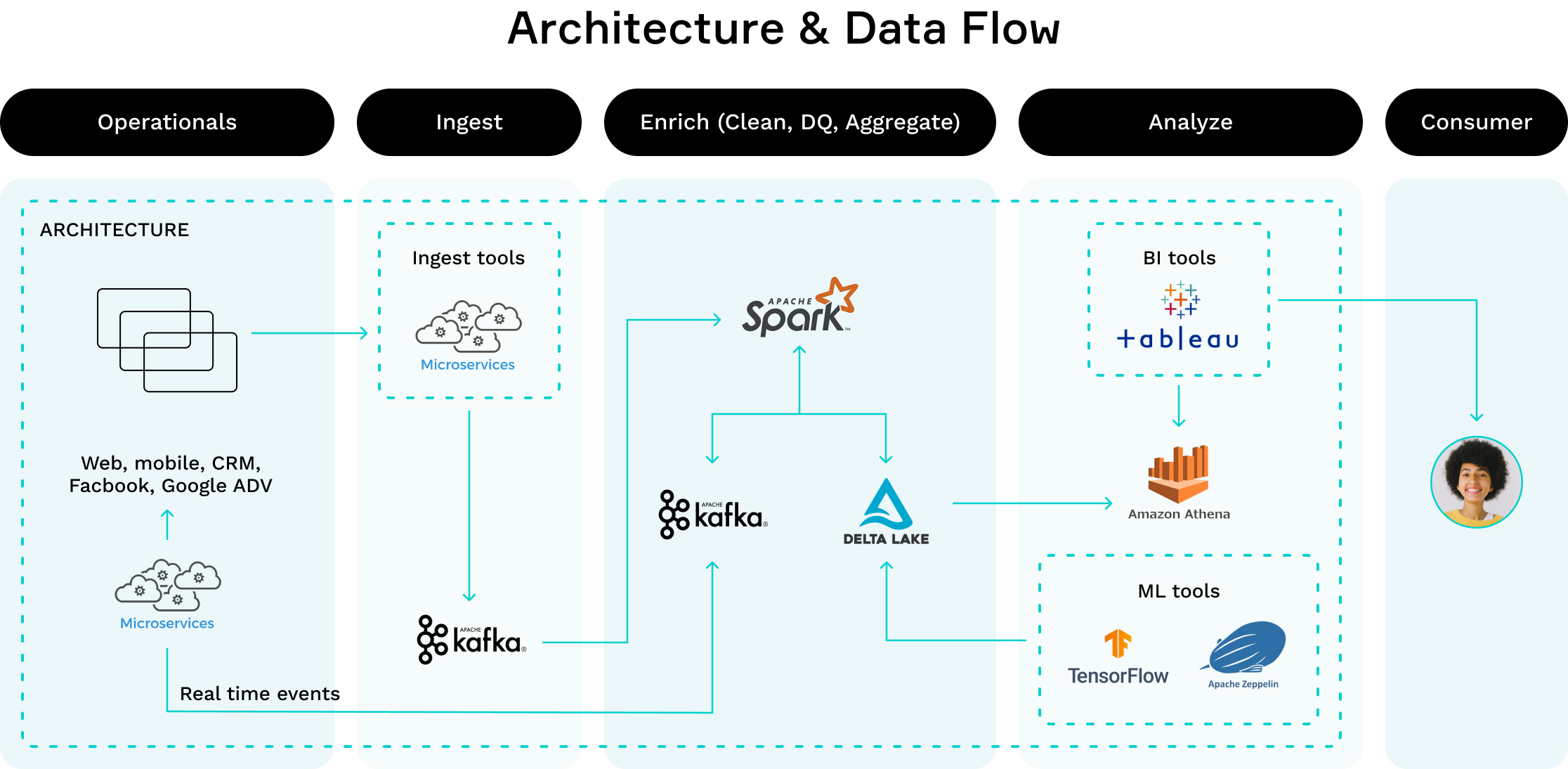

Our Analytical Streaming Architecture

The Components of a Streaming Architecture

- The Message Broker — This is the element that takes data from a source, called a producer, translates it into a standard message format, and streams it on an ongoing basis. Other components can then listen in and consume the messages passed on by the broker.

- Ingestion and Delivery Data Microservices — The stream processor collects data streams from one or more message brokers. It receives queries from users, fetches events from message queues, and applies the query to generate a result. The result may be an API call, an action, a visualization, an alert, or in some cases a new data stream.

- Data Analytics Engine — After streaming data is prepared for consumption by the stream processor, it must be analyzed to provide value. There are many different approaches to streaming data analytics; in the ingestion use case described later in this article, the analytics phase runs on Apache Spark.

- Streaming Data Storage — A data lake is the most flexible and inexpensive option for storing event data, but it has several limitations for streaming data applications. Upsolver provides a data lake platform that ingests streaming data into a data lake, creates schema-on-read, and extracts metadata. This allows data consumers to easily prepare data for analytics tools and real-time analytics.
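The relationship between these components can be sketched with a tiny in-memory broker. This is only an illustration of the publish and subscribe roles, not a replacement for a real message broker: the `Message` and `Broker` types, the topic name, and the payloads are all hypothetical, and the sketch has no persistence, partitioning, or delivery guarantees.

```go
package main

import (
	"fmt"
	"sync"
)

// Message is the standard format every producer's data is translated into.
type Message struct {
	Topic   string
	Payload string
}

// Broker is a hypothetical in-memory broker that fans each published
// message out to every subscriber of its topic.
type Broker struct {
	mu   sync.RWMutex
	subs map[string][]chan Message
}

func NewBroker() *Broker {
	return &Broker{subs: make(map[string][]chan Message)}
}

// Subscribe registers a consumer on a topic and returns the channel it
// should read from. buffer is how many messages may queue up unread.
func (b *Broker) Subscribe(topic string, buffer int) <-chan Message {
	ch := make(chan Message, buffer)
	b.mu.Lock()
	b.subs[topic] = append(b.subs[topic], ch)
	b.mu.Unlock()
	return ch
}

// Publish delivers m to every subscriber of its topic.
// It blocks while a subscriber's buffer is full.
func (b *Broker) Publish(m Message) {
	b.mu.RLock()
	defer b.mu.RUnlock()
	for _, ch := range b.subs[m.Topic] {
		ch <- m
	}
}

// Close closes all subscriber channels so consumers can finish draining.
func (b *Broker) Close() {
	b.mu.Lock()
	defer b.mu.Unlock()
	for _, chans := range b.subs {
		for _, ch := range chans {
			close(ch)
		}
	}
	b.subs = make(map[string][]chan Message)
}

func main() {
	b := NewBroker()
	processor := b.Subscribe("logs", 8) // plays the stream processor
	storage := b.Subscribe("logs", 8)   // plays the storage layer

	// The producer side: publish a few events, then shut down.
	for _, p := range []string{"GET /", "GET /pricing", "POST /signup"} {
		b.Publish(Message{Topic: "logs", Payload: p})
	}
	b.Close()

	count := 0
	for range processor {
		count++
	}
	fmt.Println("processor saw", count, "messages")

	var stored []string
	for m := range storage {
		stored = append(stored, m.Payload)
	}
	fmt.Println("storage kept", stored)
}
```

The point of the design is that the producer knows nothing about its consumers: the processor and the storage layer each receive their own copy of every message and can evolve independently.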

Streaming Ingestion and Delivery Data Microservices with Golang

When we started designing the data platform, we evaluated many of the technology stacks that are de facto standards in the data world. Scala, Akka, and Kafka Streams are the most comfortable and natural choices for this kind of use case. At ProntoPro, however, there is a very strong culture and passion around Golang. All platform and product services are developed with it, and the Backend team is prepared to tackle projects and use cases using this stack. That, together with Golang's development speed and its concurrency features (heavily used in streaming data), made us converge on this language.

We started our journey with an ingestion use case related to logs stored in Grafana Loki. We wanted to ingest them into the data platform to analyze them and provide SEO-related data to the marketing department. We developed the first streaming data service, which consumes the logs in near real-time from a WebSocket stream. After decoding, we save the logs to AWS S3 so that the analytics phase (Apache Spark) can perform the necessary cleanup and enrichment for our SEO tool. Development was fast and the testing phase was very quick thanks to Golang's fast compilation and light footprint, which helped us maintain a lean and efficient development cycle. Starting from scratch, the entire data team was able to work on a Golang codebase within a few days.

Another important use case we're going to address in the future is the delivery of preprocessed information obtained by consuming a Kafka topic. One thing that surprised us is how many libraries are already available for the data world, such as:

- Apache Kafka client: https://github.com/Shopify/sarama

- Stream processing: https://github.com/lovoo/goka

- Avro: https://github.com/linkedin/goavro

- Parquet: https://github.com/xitongsys/parquet-go

- Dataframe: https://github.com/rocketlaunchr/dataframe-go

- Protocol Buffers: https://github.com/golang/protobuf

Conclusion

Our journey into streaming data services has begun, and Golang is letting us deliver the services we need quickly. The data team works with the same technology stack as the product team, which allows us to cross-pollinate ideas while maintaining the same standards and technology vision. Golang's features are well suited to data use cases, and so far we are not having any difficulty meeting the technology challenges that the business presents. I believe this technology shift has greatly benefited the team and the entire ProntoPro technology ecosystem.